$$

\newcommand{\LetThereBe}[2]{\newcommand{#1}{#2}}

\newcommand{\letThereBe}[3]{\newcommand{#1}[#2]{#3}}

% Declare mathematics (so they can be overwritten for PDF)

\newcommand{\declareMathematics}[2]{\DeclareMathOperator{#1}{#2}}

\newcommand{\declareMathematicsStar}[2]{\DeclareMathOperator*{#1}{#2}}

% striked integral

\newcommand{\avint}{\mathop{\mathchoice{\,\rlap{-}\!\!\int}

{\rlap{\raise.15em{\scriptstyle -}}\kern-.2em\int}

{\rlap{\raise.09em{\scriptscriptstyle -}}\!\int}

{\rlap{-}\!\int}}\nolimits}

$$

$$

% Simply for testing

\LetThereBe{\foo}{\textrm{FIXME: this is a test!}}

% Font styles

\letThereBe{\mcal}{1}{\mathcal{#1}}

% Sets

\LetThereBe{\C}{\mathbb{C}}

\LetThereBe{\R}{\mathbb{R}}

\LetThereBe{\Z}{\mathbb{Z}}

\LetThereBe{\N}{\mathbb{N}}

\LetThereBe{\Im}{\mathrm{Im}}

\LetThereBe{\Re}{\mathrm{Re}}

% Sets from PDEs

\LetThereBe{\boundary}{\partial}

\letThereBe{\closure}{1}{\overline{#1}}

\letThereBe{\contf}{2}{C^{#2}(#1)}

\letThereBe{\compactContf}{2}{C_c^{#2}(#1)}

\letThereBe{\ball}{2}{B\brackets{#1, #2}}

\letThereBe{\closedBall}{2}{B\parentheses{#1, #2}}

\LetThereBe{\compactEmbed}{\subset\subset}

\letThereBe{\inside}{1}{#1^o}

\LetThereBe{\neighborhood}{\mcal O}

\letThereBe{\neigh}{1}{\neighborhood \brackets{#1}}

% Basic notation - vectors and random variables

\letThereBe{\vi}{1}{\boldsymbol{#1}} %vector or matrix

\letThereBe{\dvi}{1}{\vi{\dot{#1}}} %differentiated vector or matrix

\letThereBe{\vii}{1}{\mathbf{#1}} %if \vi doesn't work

\letThereBe{\dvii}{1}{\vii{\dot{#1}}} %if \dvi doesn't work

\letThereBe{\rnd}{1}{\mathup{#1}} %random variable

\letThereBe{\vr}{1}{\mathbf{#1}} %random vector or matrix

\letThereBe{\vrr}{1}{\boldsymbol{#1}} %random vector if \vr doesn't work

\letThereBe{\dvr}{1}{\vr{\dot{#1}}} %differentiated vector or matrix

\letThereBe{\vb}{1}{\pmb{#1}} %#TODO

\letThereBe{\dvb}{1}{\vb{\dot{#1}}} %#TODO

\letThereBe{\oper}{1}{\mathsf{#1}}

% Basic notation - general

\letThereBe{\set}{1}{\left\{#1\right\}}

\letThereBe{\brackets}{1}{\left( #1 \right)}

\letThereBe{\parentheses}{1}{\left[ #1 \right]}

\letThereBe{\dom}{1}{\mcal{D}\, \brackets{#1}}

\letThereBe{\complexConj}{1}{\overline{#1}}

% Special symbols

\LetThereBe{\vf}{\varphi}

\LetThereBe{\ve}{\varepsilon}

\LetThereBe{\tht}{\theta}

\LetThereBe{\after}{\circ}

% Shorthands

\LetThereBe{\xx}{\vi x}

\LetThereBe{\yy}{\vi y}

\LetThereBe{\XX}{\vi X}

\LetThereBe{\AA}{\vi A}

\LetThereBe{\bb}{\vi b}

\LetThereBe{\vvf}{\vi \vf}

\LetThereBe{\ff}{\vi f}

\LetThereBe{\gg}{\vi g}

% Basic functions

\letThereBe{\absval}{1}{\left| #1 \right|}

\LetThereBe{\id}{\mathrm{id}}

\letThereBe{\floor}{1}{\left\lfloor #1 \right\rfloor}

\letThereBe{\ceil}{1}{\left\lceil #1 \right\rceil}

\declareMathematics{\im}{im} %image

\declareMathematics{\tg}{tg}

\declareMathematics{\sign}{sign}

\declareMathematics{\card}{card} %cardinality

\declareMathematics{\exp}{exp}

\letThereBe{\indicator}{1}{\mathbb{1}_{#1}}

\declareMathematics{\arccot}{arccot}

\declareMathematics{\complexArg}{arg}

% Operators - Analysis

\LetThereBe{\hess}{\nabla^2}

\LetThereBe{\lagr}{\mcal L}

\LetThereBe{\lapl}{\Delta}

\declareMathematics{\grad}{grad}

\declareMathematics{\Dgrad}{D}

\LetThereBe{\gradient}{\nabla}

\LetThereBe{\jacobi}{\nabla}

\LetThereBe{\d}{\mathrm{d}}

\letThereBe{\partialDeriv}{2}{\frac {\partial #1} {\partial #2}}

\letThereBe{\partialOp}{1}{\frac {\partial} {\partial #1}}

\letThereBe{\deriv}{2}{\frac {\d #1} {\d #2}}

\letThereBe{\derivOp}{1}{\frac {\d} {\d #1}\,}

% Useful commands

\letThereBe{\onTop}{2}{\mathrel{\overset{#2}{#1}}}

\letThereBe{\onBottom}{2}{\mathrel{\underset{#2}{#1}}}

\letThereBe{\tOnTop}{2}{\mathrel{\overset{\text{#2}}{#1}}}

\LetThereBe{\EQ}{\onTop{=}{!}}

\LetThereBe{\letDef}{:=} %#TODO: change the symbol

\LetThereBe{\isPDef}{\onTop{\succ}{?}}

% Optimization

\declareMathematicsStar{\argmin}{argmin}

\declareMathematicsStar{\argmax}{argmax}

\declareMathematics{\prox}{prox}

\declareMathematics{\loss}{loss}

\declareMathematics{\supp}{supp}

\letThereBe{\Supp}{1}{\supp\brackets{#1}}

\LetThereBe{\Koop}{\mcal K}

\letThereBe{\oneToN}{1}{\left[#1\right]}

\LetThereBe{\constraint}{\text{s.t.}\;}

% PDEs

% \avint -- defined in format-respective tex files

\LetThereBe{\fundamental}{\Phi}

\LetThereBe{\fund}{\fundamental}

\letThereBe{\normaDeriv}{1}{\partialDeriv{#1}{\vec{n}}}

\letThereBe{\volAvg}{2}{\avint_{\ball{#1}{#2}}}

\LetThereBe{\VolAvg}{\volAvg{x}{\ve}}

\letThereBe{\surfAvg}{2}{\avint_{\boundary \ball{#1}{#2}}}

\LetThereBe{\SurfAvg}{\surfAvg{x}{\ve}}

\LetThereBe{\corrF}{\varphi^{\times}}

\LetThereBe{\greenF}{G}

\letThereBe{\reflect}{1}{\tilde{#1}}

\letThereBe{\unitBall}{1}{\alpha(#1)}

\LetThereBe{\conv}{*}

\letThereBe{\dotP}{2}{#1 \cdot #2}

\letThereBe{\translation}{1}{\tau_{#1}}

\declareMathematics{\dist}{dist}

\letThereBe{\regularizef}{1}{\eta_{#1}}

\letThereBe{\fourier}{1}{\widehat{#1}}

\letThereBe{\ifourier}{1}{\check{#1}}

\LetThereBe{\fourierOp}{\mcal F}

\letThereBe{\Norm}{1}{\absval{#1}}

$$

$$

% Linear algebra

\letThereBe{\norm}{1}{\left\lVert #1 \right\rVert}

\letThereBe{\scal}{2}{\left\langle #1, #2 \right\rangle}

\letThereBe{\avg}{1}{\overline{#1}}

\letThereBe{\Avg}{1}{\bar{#1}}

\letThereBe{\linspace}{1}{\mathrm{lin}\set{#1}}

\letThereBe{\algMult}{1}{\mu_{\mathrm A} \brackets{#1}}

\letThereBe{\geomMult}{1}{\mu_{\mathrm G} \brackets{#1}}

\LetThereBe{\Nullity}{\mathrm{nullity}}

\letThereBe{\nullity}{1}{\Nullity \brackets{#1}}

\LetThereBe{\nulty}{\nu}

% Linear algebra - Matrices

\LetThereBe{\tr}{\top}

\LetThereBe{\Tr}{^\tr}

\LetThereBe{\pinv}{\dagger}

\LetThereBe{\Pinv}{^\dagger}

\LetThereBe{\Inv}{^{-1}}

\LetThereBe{\ident}{\vi{I}}

\letThereBe{\mtr}{1}{\begin{pmatrix}#1\end{pmatrix}}

\letThereBe{\bmtr}{1}{\begin{bmatrix}#1\end{bmatrix}}

\declareMathematics{\trace}{tr}

\declareMathematics{\diagonal}{diag}

$$

$$

% Statistics

\LetThereBe{\noise}{\mathscr{N}}

\LetThereBe{\normalDist}{\mathcal N}

\letThereBe{\normalD}{1}{\normalDist \brackets{#1}}

\letThereBe{\NormalD}{2}{\normalDist \brackets{#1, #2}}

\LetThereBe{\iid}{\overset{\text{iid}}{\sim}}

\LetThereBe{\ind}{\overset{\text{ind}}{\sim}}

\LetThereBe{\condp}{\,\vert\,}

\LetThereBe{\acov}{\gamma}

\LetThereBe{\acf}{\rho}

\LetThereBe{\stdev}{\sigma}

\LetThereBe{\procMean}{\mu}

\LetThereBe{\procVar}{\stdev^2}

\declareMathematics{\variance}{var}

\letThereBe{\Variance}{1}{\variance \brackets{#1}}

\declareMathematics{\cov}{cov}

\declareMathematics{\corr}{cor}

\declareMathematics{\expectedValue}{\mathbb{E}}

\letThereBe{\expect}{1}{\expectedValue #1}

\letThereBe{\Expect}{1}{\expectedValue \brackets{#1}}

\letThereBe{\WN}{2}{\mathrm{WN}\brackets{#1,#2}}

\declareMathematics{\uniform}{Unif}

\LetThereBe{\att}{_t} %at time

\letThereBe{\estim}{1}{\hat{#1}}

\letThereBe{\predict}{3}{\estim {\rnd #1}_{#2 | #3}}

\letThereBe{\periodPart}{3}{#1+#2-\ceil{#2/#3}#3}

\letThereBe{\infEstim}{1}{\tilde{#1}}

\LetThereBe{\backs}{\oper B}

\LetThereBe{\diff}{\oper \Delta}

\LetThereBe{\BLP}{\oper P}

\LetThereBe{\arPoly}{\Phi}

\letThereBe{\ArPoly}{1}{\arPoly\brackets{#1}}

\LetThereBe{\maPoly}{\Theta}

\letThereBe{\MaPoly}{1}{\maPoly\brackets{#1}}

\letThereBe{\ARmod}{1}{\mathrm{AR}\brackets{#1}}

\letThereBe{\MAmod}{1}{\mathrm{MA}\brackets{#1}}

\letThereBe{\ARMA}{2}{\mathrm{ARMA}\brackets{#1, #2}}

\letThereBe{\sARMA}{3}{\mathrm{ARMA}\brackets{#1}\brackets{#2}_{#3}}

\letThereBe{\SARIMA}{3}{\mathrm{ARIMA}\brackets{#1}\brackets{#2}_{#3}}

\letThereBe{\ARIMA}{3}{\mathrm{ARIMA}\brackets{#1, #2, #3}}

\LetThereBe{\pacf}{\alpha}

\letThereBe{\parcorr}{3}{\rho_{#1 #2 | #3}}

\LetThereBe{\likely}{\mcal L}

\letThereBe{\Likely}{1}{\likely\brackets{#1}}

\LetThereBe{\loglikely}{\mcal l}

\letThereBe{\Loglikely}{1}{\loglikely \brackets{#1}}

\LetThereBe{\CovMat}{\Gamma}

\LetThereBe{\covMat}{\vi \CovMat}

\LetThereBe{\rcovMat}{\vrr \CovMat}

\LetThereBe{\AIC}{\mathrm{AIC}}

\LetThereBe{\BIC}{\mathrm{BIC}}

\LetThereBe{\AICc}{\mathrm{AIC}_c}

\LetThereBe{\nullHypo}{H_0}

\LetThereBe{\altHypo}{H_1}

\LetThereBe{\rve}{\rnd \ve}

\LetThereBe{\rtht}{\rnd \theta}

\LetThereBe{\rX}{\rnd X}

\LetThereBe{\rY}{\rnd Y}

\LetThereBe{\rZ}{\rnd Z}

\LetThereBe{\vrZ}{\vr Z}

\LetThereBe{\vrY}{\vr Y}

\LetThereBe{\vrX}{\vr X}

% Different types of convergence

\LetThereBe{\inDist}{\onTop{\to}{d}}

\letThereBe{\inDistWhen}{1}{\onBottom{\onTop{\longrightarrow}{d}}{#1}}

\LetThereBe{\inProb}{\onTop{\to}{P}}

\letThereBe{\inProbWhen}{1}{\onBottom{\onTop{\longrightarrow}{P}}{#1}}

\LetThereBe{\inMeanSq}{\onTop{\to}{L^2}}

\letThereBe{\inMeanSqWhen}{1}{\onBottom{\onTop{\longrightarrow}{L^2}}{#1}}

\LetThereBe{\convergeAS}{\tOnTop{\to}{a.s.}}

\letThereBe{\convergeASWhen}{1}{\onBottom{\tOnTop{\longrightarrow}{a.s.}}{#1}}

$$

$$

% Game Theory

\LetThereBe{\doms}{\succ}

\LetThereBe{\isdom}{\prec}

\letThereBe{\OfOthers}{1}{_{-#1}}

\LetThereBe{\ofOthers}{\OfOthers{i}}

\LetThereBe{\pdist}{\sigma}

\letThereBe{\domGame}{1}{G_{DS}^{#1}}

\letThereBe{\ratGame}{1}{G_{Rat}^{#1}}

\letThereBe{\bestRep}{2}{\mathrm{BR}_{#1}\brackets{#2}}

\letThereBe{\perf}{1}{{#1}_{\mathrm{perf}}}

\LetThereBe{\perfG}{\perf{G}}

\letThereBe{\imperf}{1}{{#1}_{\mathrm{imp}}}

\LetThereBe{\imperfG}{\imperf{G}}

\letThereBe{\proper}{1}{{#1}_{\mathrm{proper}}}

\letThereBe{\finrep}{2}{{#2}_{#1{\text -}\mathrm{rep}}} %T-stage game

\letThereBe{\infrep}{1}{#1_{\mathrm{irep}}}

\LetThereBe{\repstr}{\tau} %strategy in a repeated game

\LetThereBe{\emptyhist}{\epsilon}

\letThereBe{\extrep}{1}{{#1^{\mathrm{rep}}}}

\letThereBe{\avgpay}{1}{#1^{\mathrm{avg}}}

\LetThereBe{\succf}{\pi} %successor function

\LetThereBe{\playf}{\rho} %player function

\LetThereBe{\actf}{\chi} %action function

% ODEs

\LetThereBe{\timeInt}{\mcal I}

\LetThereBe{\stimeInt}{\mcal J}

\LetThereBe{\Wronsk}{\mcal W}

\letThereBe{\wronsk}{1}{\Wronsk \parentheses{#1}}

\LetThereBe{\prufRadius}{\rho}

\LetThereBe{\prufAngle}{\vf}

\LetThereBe{\weyr}{\sigma}

\LetThereBe{\linDifOp}{\mathsf{L}}

\LetThereBe{\Hurwitz}{\vi H}

\letThereBe{\hurwitz}{1}{\Hurwitz \brackets{#1}}

$$

A Stochastic Point of View

The observed time series \(\rnd X_1, \dots,\rnd X_n\) is a sequence of numbers. Our aim is to understand the mechanism that generated the series and to make use of it. For that we need a mathematical model that captures randomness, by which we mean uncertainty and/or limited knowledge, and that can cope with partial information. The observed data are viewed as realizations of random variables, so we need to study these variables jointly, including their relationships.

Definition 2.1 A stochastic process is a family of random variables \(\set{\rnd X_t : t \in T}\) defined on a probability space \((\Omega, \mcal{A}, P)\).

The symbols in Definition 2.1 have the following meaning:

- \(T\) is the index set;

- \(\set{\rnd X_t : t \in T}\) can be seen as a function of \(t\) and \(\omega\), i.e., \(\set{\rnd X (t, \omega) : t \in T, \omega \in \Omega}\);

- for a fixed \(t \in T, \; \rnd X_t = \rnd X_t (\cdot) = \set{\rnd X_t (\omega) : \omega \in \Omega}\) is a random variable defined on \(\Omega\), i.e. the process is seen as a collection of random variables indexed by \(T\);

- for a fixed \(\omega \in \Omega, \; \rnd X = \rnd X(\omega) = \set{\rnd X_t (\omega) : t \in T}\) is a function on \(T\), i.e. the process is seen as a random function.

For a fixed \(\omega \in \Omega\), the function \(t \mapsto \rnd X_t(\omega)\) is called a sample path, trajectory or realization of \(\set{\rnd X_t : t \in T}\).
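The two views of a process (fix \(\omega\) and vary \(t\), or fix \(t\) and vary \(\omega\)) can be illustrated with a small simulation. This is only a sketch under assumptions that are not in the text: the outcome \(\omega\) is represented by a random seed, the variables are taken to be independent standard normal, and the function name `sample_path` is hypothetical.

```python
import random

def sample_path(omega_seed: int, n: int) -> list[float]:
    """Return X_1(omega), ..., X_n(omega) for the outcome encoded by the seed."""
    rng = random.Random(omega_seed)
    # Assumption for illustration: independent N(0, 1) values.
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

# Fixing omega gives one trajectory, i.e. a function of t.
path_a = sample_path(omega_seed=1, n=5)
path_b = sample_path(omega_seed=2, n=5)

# Fixing t and varying omega gives draws of the single random variable X_t.
t = 3
draws_of_X_t = [sample_path(omega_seed=s, n=5)[t - 1] for s in range(100)]

print("one trajectory:", [round(v, 2) for v in path_a])
print("number of draws of X_3:", len(draws_of_X_t))
```

Calling `sample_path` twice with the same seed returns the same trajectory, which mirrors the fact that a realization is fully determined once \(\omega\) is fixed.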

Types of stochastic processes

There are many types of stochastic processes; the most important ones for us are:

- time series (discrete-time processes):

  - \(T = \Z\) (or \(T \subset \Z\), e.g., \(T = \N\));

  - the observed time series is seen as a realization of the stochastic process \(\set{\rnd X_t : t \in \Z} = \set{\dots , \rnd X_{-1}, \rnd X_0, \rnd X_1, \dots}\);

  - a time series is a random sequence, i.e., a sequence of random variables;

- continuous-time processes: \(T = \R\) or \(T = [a, b]\) (random function);

- spatially indexed processes: \(T = \R^d\) or \(T \subset \R^d\) (random fields);

- processes on lattices: \(T = \Z^d, \dots\);

- spatio-temporal processes: \(T = \R^d \times [a, b], \dots\);

- etc.

Although we will see other types of index sets in other courses, in our lectures we will stick to time series only (where time is discretized).

Discrete sampling of time series

Time series are random sequences, i.e., \(T = \Z\). The discretization of time can arise in several ways:

- discrete sampling of a continuous-time process, e.g., closing prices of a share or an electrical signal in telecommunications;

- aggregation of a continuous-time process, e.g., daily precipitation or monthly electricity production;

- a process that is discrete by nature, e.g., a regularly repeated medical experiment.

Distribution of a stochastic process

We define the finite-dimensional distributions for all \(k \in \N\) and \(t_1, \dots, t_k \in T\) as \[

F_{t_1,\dots,t_k} (x_1, \dots, x_k) = P(\rnd X_{t_1} \leq x_1, \dots, \rnd X_{t_k} \leq x_k).

\]

Here, we took the random vector \((\rnd X_{t_1}, \dots, \rnd X_{t_k})\) and looked at its joint distribution function.
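The definition can be checked numerically in a simple case. The sketch below assumes, purely for illustration, that \(\rnd X_{t_1}\) and \(\rnd X_{t_2}\) are independent standard normal variables (this choice is not part of the text). It estimates \(F_{t_1,t_2}(x_1, x_2)\) by the fraction of simulated pairs that fall below both thresholds. For this toy process the exact value at \((0, 0)\) is \(0.5 \cdot 0.5 = 0.25\). The helper name `empirical_joint_cdf` is hypothetical.

```python
import random

def empirical_joint_cdf(pairs, x1, x2):
    """Fraction of simulated pairs (X_t1, X_t2) with X_t1 <= x1 and X_t2 <= x2."""
    hits = sum(1 for a, b in pairs if a <= x1 and b <= x2)
    return hits / len(pairs)

rng = random.Random(0)  # fixed seed so the run is reproducible
# Assumption: X_t1 and X_t2 are independent N(0, 1); each pair is one outcome omega.
pairs = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(20000)]

estimate = empirical_joint_cdf(pairs, 0.0, 0.0)
print(f"estimated F(0, 0) = {estimate:.3f} (exact value for this toy process: 0.25)")
```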

A system of distribution functions \(\set{F_{t_1, \dots, t_k}: \; t_1, \dots, t_k \in T, k \in \N}\) is called consistent if it has the following properties:

- \(\lim_{x_{k+1} \to \infty} F_{t_1, \dots, t_k, t_{k+1}}(x_1, \dots, x_k, x_{k+1}) = F_{t_1, \dots, t_k}(x_1, \dots, x_k)\);

- \(F_{t_1, \dots, t_k}(x_1, \dots, x_k) = F_{t_{i_1}, \dots, t_{i_k}}(x_{i_1}, \dots, x_{i_k})\) for all permutations \((i_1, \dots, i_k)\) of \((1, \dots, k)\).

A stochastic process always has a consistent system of finite-dimensional distributions.

Theorem 2.1 (Daniell–Kolmogorov) Let \(\set{ F_{t_1,\dots,t_k} : t_1,\dots, t_k \in T , k \in \N}\) be a consistent system of distribution functions. Then there exists a stochastic process \(\set{\rnd X_t : t \in T}\) such that for all \(k \in \N, t_1, \dots, t_k \in T\) the joint distribution function of \((\rnd X_{t_1} , \dots, \rnd X_{t_k})\) is \(F_{t_1, \dots,t_k}\).

A process whose finite-dimensional distributions are all multivariate normal is called Gaussian. Much of the information about a process is often contained in its means, variances and covariances, so focusing on the first and second moments is frequently sufficient. Moreover, if the joint distributions are multivariate normal, the first and second moments completely determine them.

Definition 2.2 (Mean, autocovariance, autocorrelation) For a stochastic process \(\set{\rnd X_t : t \in \Z}\) with finite second moments, we define:

- mean function \[

\procMean_t = \expect \rnd X_t , t \in \Z;

\]

- autocovariance function (acov) \[

\acov(s, t) = \cov (\rnd X_s , \rnd X_t) = \Expect{(\rnd X_s - \procMean_s )(\rnd X_t - \procMean_t)}, \; s, t \in \Z;

\]

- autocorrelation function (acf) \[

\acf(s, t) = \corr (\rnd X_s ,\rnd X_t) = \frac{\cov (\rnd X_s, \rnd X_t)} {\sqrt{\variance \rnd X_s \variance \rnd X_t}} , \; s, t \in \Z.

\]
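When many independent realizations of a process are available, the three functions in Definition 2.2 can be estimated by averaging over realizations at fixed time points. The following is a minimal sketch of that idea, not a prescribed estimator: it uses the \(1/N\) normalization, 0-based positions in place of the time index, and hypothetical helper names. The two hand-made paths are chosen only so that the numbers can be verified by hand.

```python
import math

def mean_at(paths, t):
    """Average of X_t over all paths (t is a 0-based position)."""
    return sum(p[t] for p in paths) / len(paths)

def autocov(paths, s, t):
    """Ensemble estimate of cov(X_s, X_t) with 1/N normalisation."""
    m_s, m_t = mean_at(paths, s), mean_at(paths, t)
    return sum((p[s] - m_s) * (p[t] - m_t) for p in paths) / len(paths)

def autocorr(paths, s, t):
    """Ensemble estimate of cor(X_s, X_t)."""
    return autocov(paths, s, t) / math.sqrt(autocov(paths, s, s) * autocov(paths, t, t))

# Two tiny hand-made "paths"; the second is an exact linear function of the first.
paths = [[1.0, 2.0], [3.0, 6.0]]
print(mean_at(paths, 0), mean_at(paths, 1))  # 2.0 4.0
print(autocov(paths, 0, 1))                  # 2.0
print(autocorr(paths, 0, 1))                 # 1.0
```

With a single observed path such ensemble averages are not available, which is one reason additional assumptions on the process are usually needed before these quantities can be estimated from data.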

Examples

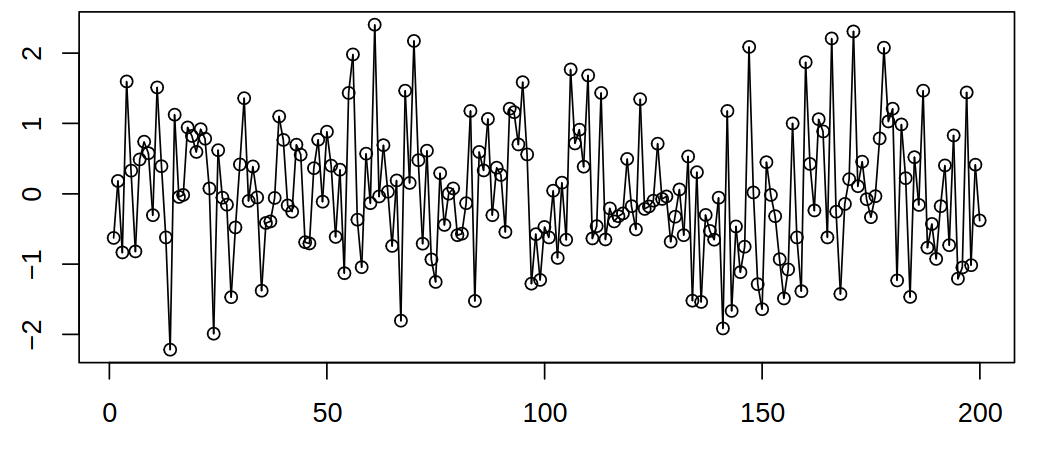

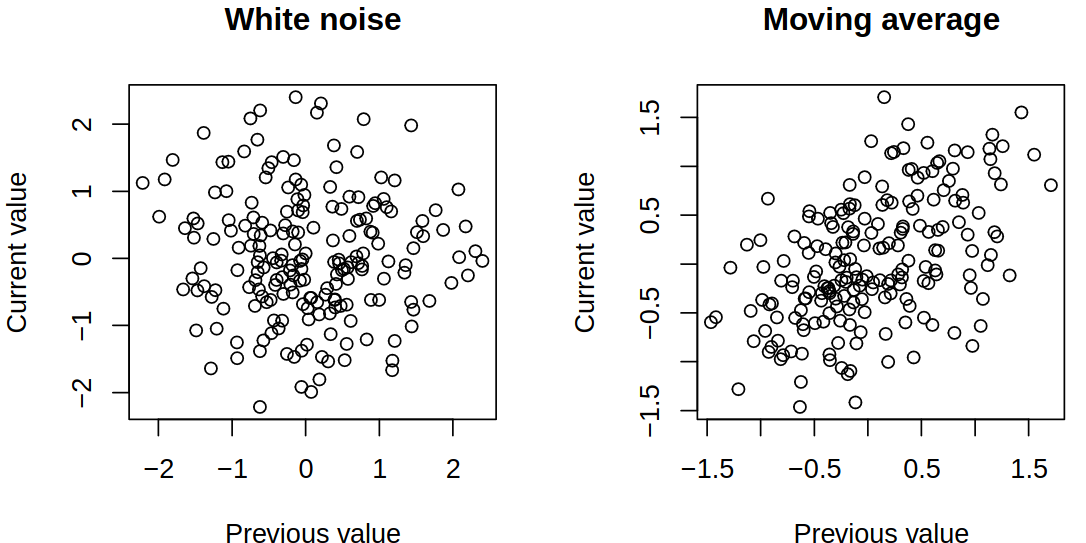

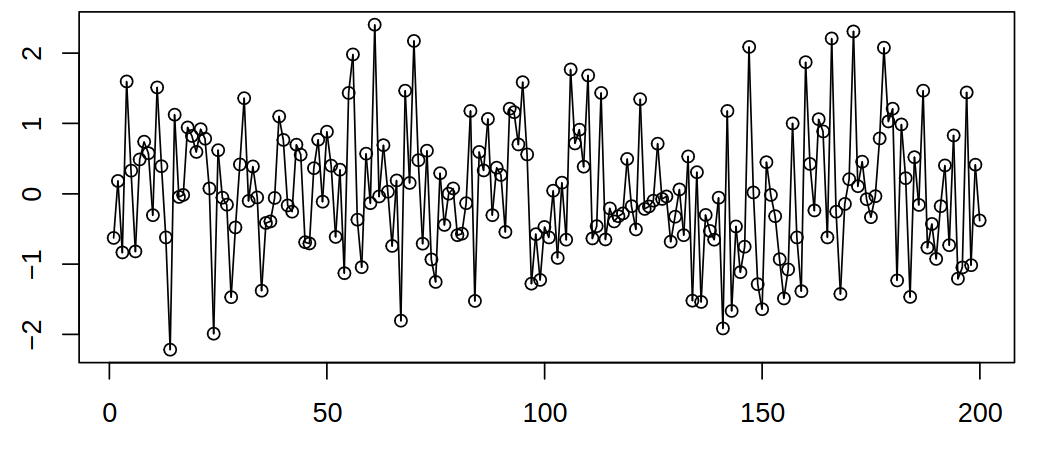

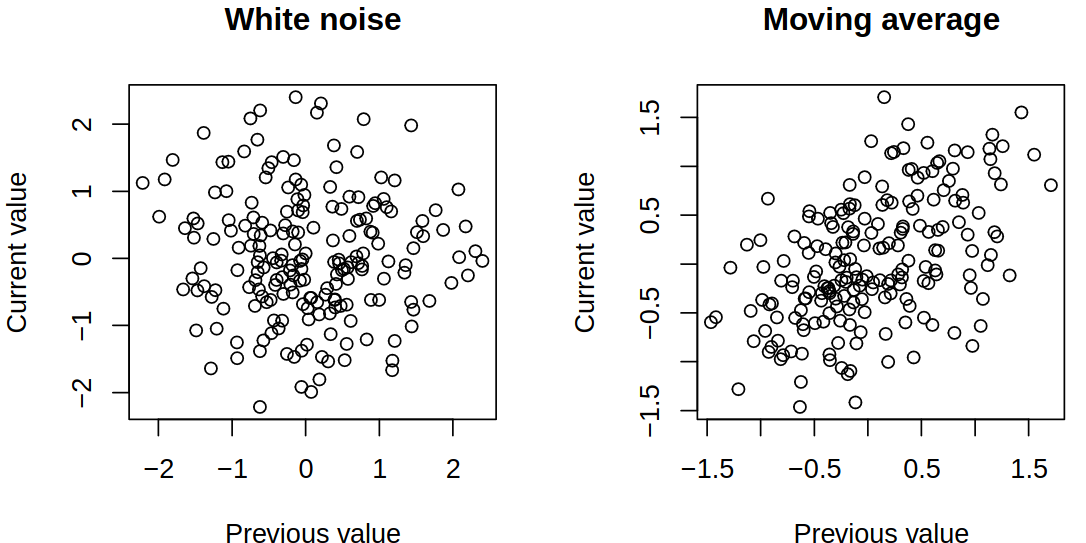

White noise process

Let \(\set{\rve_t : t \in \Z}\) be a sequence of uncorrelated random variables with mean \(0\) and variance \(\procVar\). That is, \(\expect{\rve_t} = 0\), \(\variance \rve_t = \procVar\) and \(\cov(\rve_s , \rve_t) = 0\) for \(s \neq t\). We write \(\set{\rve_t} \sim \WN{0}{\procVar}\).

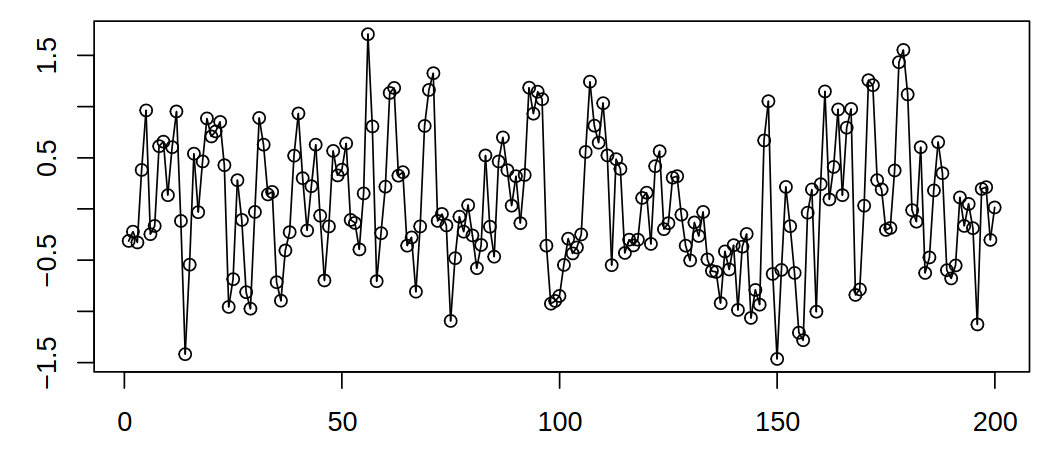

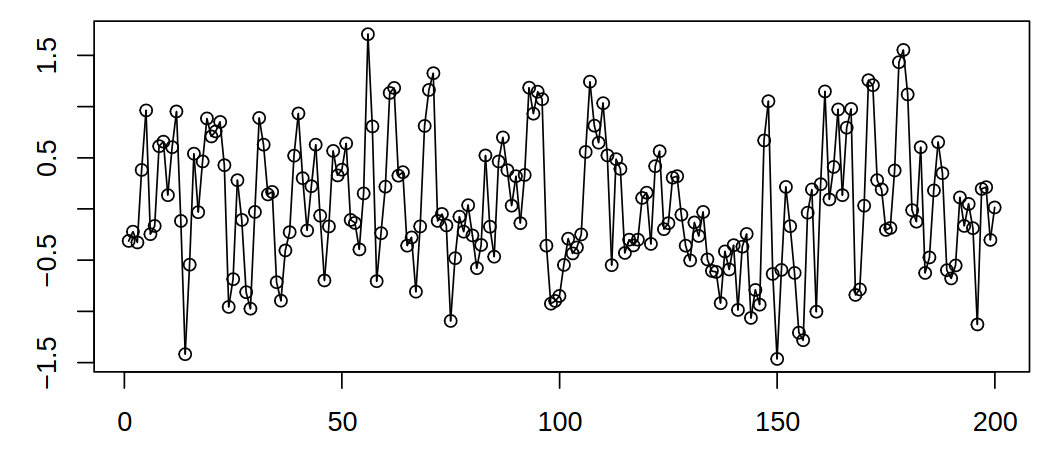
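The defining properties of white noise can be checked on simulated data. The sketch below assumes independent Gaussian draws with \(\stdev = 2\), which is one particular instance of white noise (the definition only requires uncorrelated variables). It computes the sample mean, the sample variance and the lag-1 sample autocovariance from a single long path. The helper names are hypothetical.

```python
import random

def sample_mean(x):
    """Arithmetic mean of the observed path."""
    return sum(x) / len(x)

def sample_autocov(x, h):
    """Sample autocovariance at lag h >= 0 from a single path (1/n normalisation)."""
    n, m = len(x), sample_mean(x)
    return sum((x[i] - m) * (x[i + h] - m) for i in range(n - h)) / n

sigma = 2.0
rng = random.Random(1)  # fixed seed so the run is reproducible
# Assumption: Gaussian iid draws; white noise itself only requires uncorrelatedness.
eps = [rng.gauss(0.0, sigma) for _ in range(20000)]

wn_mean = sample_mean(eps)
wn_var = sample_autocov(eps, 0)
wn_cov1 = sample_autocov(eps, 1)
print(f"mean ~ {wn_mean:.3f}, variance ~ {wn_var:.3f}, lag-1 covariance ~ {wn_cov1:.3f}")
```

The three estimates should be close to \(0\), \(\procVar = 4\) and \(0\), respectively, up to sampling error.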

Moving average

Let \(\set{\rve_t : t \in \Z}\) be \(\WN{0}{\procVar}\) and define \[

\rnd X_t = (\rve_{t} + \rve_{t-1})/2.

\]

Then the mean function is given by \[

\procMean_t = \Expect{\rve_t +\rve_{t-1}}/2 = 0,

\] and the variance by \[

\acov(t,t) = \Variance{(\rve_t + \rve_{t-1})/2} = \frac {\procVar} 2.

\] The autocovariance of the process at nonzero lags is \[

\begin{gathered}

\acov(t, t+1) = \cov \brackets{(\rve_t + \rve_{t-1})/2, (\rve_{t+1} + \rve_t)/2} = \frac{\variance \rve_t}{4} = \frac{\procVar} 4, \\

\acov(t, t+h) = \cov \brackets{(\rve_t + \rve_{t-1})/2, (\rve_{t+h} + \rve_{t+h-1})/2} = 0, \quad \absval{h} > 1

\end{gathered}

\] and lastly, the autocorrelation can be expressed as \[

\acf(s,t) = \begin{cases}

1, & s = t, \\

0.5, & \absval{s-t} = 1, \\

0, & \absval{s-t} > 1,

\end{cases}

\] so in other words, we have a correlation between consecutive values, which is constant in time.

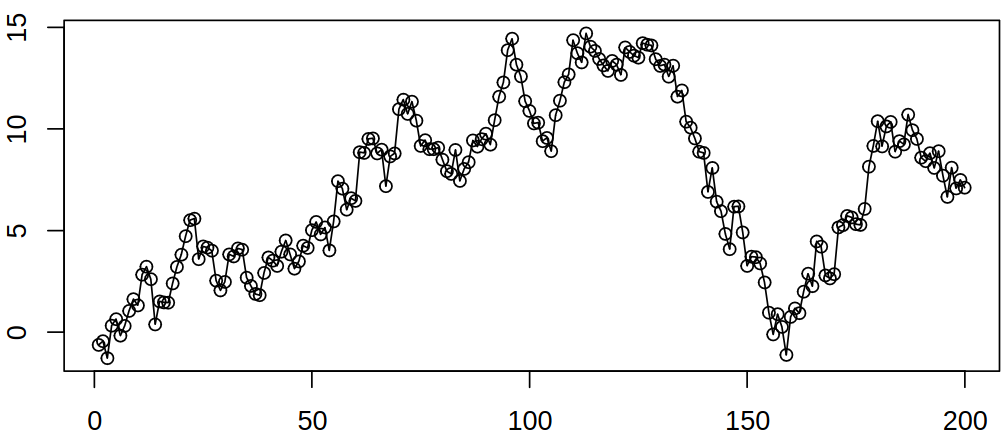

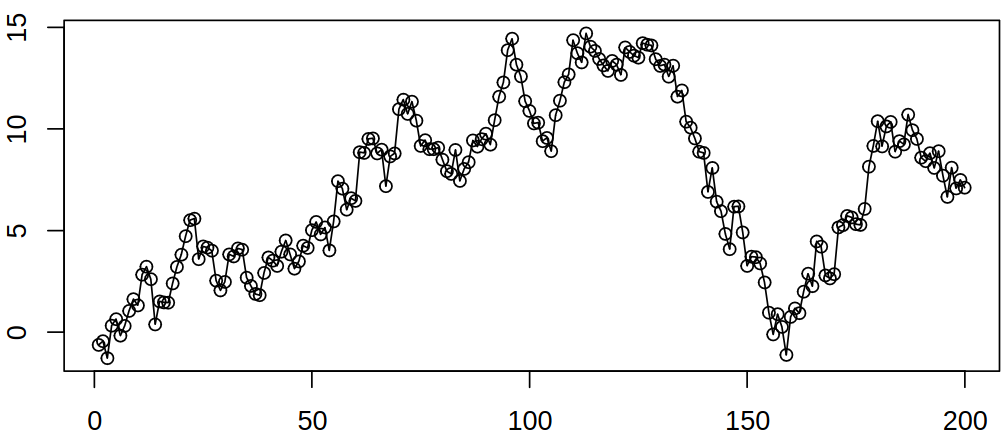
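The autocorrelation structure of this moving average can be reproduced by simulation. The sketch below assumes Gaussian white noise with \(\stdev = 1\) (an illustrative choice, not required by the derivation) and estimates the variance and the autocorrelations at lags \(0\) to \(3\) from one long path. The helper names are hypothetical.

```python
import random

def autocov(x, h):
    """Sample autocovariance at lag h >= 0 from a single path (1/n normalisation)."""
    n = len(x)
    m = sum(x) / n
    return sum((x[i] - m) * (x[i + h] - m) for i in range(n - h)) / n

def acf(x, h):
    """Sample autocorrelation at lag h."""
    return autocov(x, h) / autocov(x, 0)

sigma = 1.0
rng = random.Random(2)  # fixed seed so the run is reproducible
# Assumption: Gaussian white noise; one extra draw supplies the first lagged value.
eps = [rng.gauss(0.0, sigma) for _ in range(50001)]

# X_t = (eps_t + eps_{t-1}) / 2
x = [(eps[t] + eps[t - 1]) / 2 for t in range(1, len(eps))]

ma_var = autocov(x, 0)
ma_acf = [acf(x, h) for h in range(4)]
print(f"variance ~ {ma_var:.3f}")
print("acf at lags 0..3 ~", [round(r, 3) for r in ma_acf])
```

The estimated variance should be close to \(\procVar / 2 = 0.5\), the lag-1 autocorrelation close to \(0.5\), and the autocorrelations at lags \(2\) and \(3\) close to \(0\).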

Random walk

A random walk is a stochastic process that arises as the cumulative sum of white noise. Let \(\rve_1, \rve_2, \dots\) be a sequence of independent, identically distributed random variables with mean \(0\) and variance \(\procVar\). Then define \[

\rnd X_t = \sum_{j = 1}^t \rve_j,\; t = 1,2, \dots

\] or in other words \[

\rnd X_1 = \rve_1, \quad \rnd X_t = \rnd X_{t-1} + \rve_t, \; t = 2,3, \dots,

\] where \(\rve_t\) are the steps taken by the “random walker” and \(\rnd X_t\) is the walker's position at time \(t\).
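The cumulative-sum definition and the step-by-step construction, in which each position adds the current step to the previous position, produce the same path. The sketch below checks this on simulated Gaussian steps with \(\stdev = 1\) (an illustrative assumption). It also estimates the variance of the position after \(50\) steps over many independent walks. Since the steps are independent with common variance, \(\variance \rnd X_t = t \procVar\), so the estimate should be close to \(50\). The function name `random_walk` is hypothetical.

```python
import random
from itertools import accumulate

def random_walk(steps):
    """Positions X_1, ..., X_n as cumulative sums of the steps."""
    return list(accumulate(steps))

rng = random.Random(3)  # fixed seed so the run is reproducible
sigma = 1.0
# Assumption: Gaussian steps; the definition only needs iid steps with mean 0.
steps = [rng.gauss(0.0, sigma) for _ in range(50)]
positions = random_walk(steps)

# Same path built step by step: X_1 = eps_1, then each position adds the current step.
recursive = [steps[0]]
for e in steps[1:]:
    recursive.append(recursive[-1] + e)

# Monte Carlo estimate of Var X_50 over 2000 independent walks.
finals = [random_walk([rng.gauss(0.0, sigma) for _ in range(50)])[-1] for _ in range(2000)]
mean_final = sum(finals) / len(finals)
var_final = sum((v - mean_final) ** 2 for v in finals) / len(finals)

print("definitions agree:", positions == recursive)
print(f"estimated Var X_50 ~ {var_final:.1f}")
```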